Sentino – Methodology for Personality Profiling

Written by: PhD Deniss Stepanovs

Abstract

This article presents Sentino, a solution that combines classical psychological testing with advanced semantic analysis of textual data and intelligent chatbots for personality assessment. First, a multidimensional psychological vector space was created from a dataset of more than 5,000 psychological statements (items). Second, various data augmentation strategies were employed to make the dataset more complete. Afterwards, a transformer-based NLP model was fine-tuned on this data. The advantages of semantic analysis over lexical analysis are briefly discussed. The Sentino API is showcased in real-world scenarios, including classical testing, text and interview processing, and chatbot-driven interactions, highlighting its versatility and applicability. Limitations related to data, NLP models, approach, and application are addressed. Overall, the article gives an overview of Sentino’s methodology, data, and real-world applications in the field of personality assessment.

1 Introduction

The exploration of human personality has always been important, but it has become especially pressing today, in the era of personalization. The classical approach to personality assessment, which relies on long questionnaires, is in need of a fresh perspective. Traditional methods often involve asking individuals numerous closed-ended questions, which are limited in their ability to capture the complexities of human personality.

Currently, we have access to cutting-edge tools and technologies that are able to revolutionize the way we explore and understand personality, its dimensions, traits, and facets. These advancements include:

- The ability to conduct large-scale personality questionnaires, allowing us to gather data from a diverse and extensive range of individuals.

- Automation of the analysis process, reducing the need for manual assessment and enabling the exploration of open-ended questions and unstructured textual data.

- Utilization of a state-of-the-art Natural Language Processing (NLP) stack that bridges the gap between human expression and machine understanding, allowing personality-related content to be analyzed and interpreted in a more nuanced and comprehensive manner.

The advancements mentioned above have marked the beginning of a new era in personality psychology, with a new level of data-driven efficacy and nuanced understanding. In the following sections, we will dive into Sentino’s methodology, which applies these approaches to human personality. This paper has the following structure: Section 2 is devoted to the data and its crucial role in Sentino’s solution; Section 3 discusses the methodology for personality assessment; Section 4 gives an overview of practical applications of Sentino’s methodology in various contexts; the “Conclusion” summarizes the contribution to personality assessment and its implications.

2 Data

In this section, we discuss the dataset that forms the psychological basis of Sentino’s entire analysis process.

2.1 Golden Data Set

Sentino’s data-driven approach to personality assessment relies on comprehensive datasets and advanced analysis techniques. We started by incorporating large datasets from different sources that cover more than 20 widely used and scientifically validated personality questionnaires, such as Big Five, NEO, HEXACO, and ORVIS. The complete list of inventories and related scientific publications is available in the dedicated section of our website.

These inventories represent the foundation of a carefully curated collection of around 5,000 psychological statements, commonly known as items. These statements were designed to capture the vast spectrum of personality facets, essentially representing the entirety of the human psyche. Each item is backed by data from 1,000 to 500,000 respondents. This approach allows for a profound understanding of the interrelationships both among individual items and between these items and personality questionnaires (inventories). Using the response data for the 5,000 items, we constructed the item correlation matrix. We then assigned a vector to each item such that the dot product of any two item vectors equals the correlation between the corresponding items. These item vectors constitute the psychological vector space (see Section 3.2).
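As a minimal sketch of how such an item correlation matrix could be computed, assume the responses are stored as one list of Likert answers per item, given by the same respondents in the same order. The answers below are made up for illustration, and the function names `pearson` and `correlation_matrix` are hypothetical, not part of Sentino's code.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equally long answer lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def correlation_matrix(responses):
    """responses: dict mapping item text -> list of answers (same respondents)."""
    items = list(responses)
    matrix = [[pearson(responses[a], responses[b]) for b in items] for a in items]
    return items, matrix

# Made-up answers of six respondents on a 1-5 scale (illustration only).
responses = {
    "I like to go out.": [5, 4, 2, 1, 4, 3],
    "I frequently attend parties.": [5, 5, 1, 2, 4, 3],
    "I am an orderly person.": [2, 3, 4, 5, 3, 3],
}
items, corr = correlation_matrix(responses)
```

In this toy data the two sociability items correlate strongly and positively, while the orderliness item correlates negatively with both.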

It’s important to note that, at this early phase, we relied only on existing techniques and commonly used psychometrics and did not introduce any proprietary know-how. The validity and reliability of the selected items have been robustly shown by the major psychological studies from which they were derived, and are further supported by the corresponding scientific publications [1, 2, 3, 4].

2.2 Data Augmentation

However, the pool size of 5,000 items is not sufficient to encompass the entire range of natural language diversity. Therefore, the need for data augmentation arises.

Our fundamental approach to data augmentation involves the creation of a parallel corpus focused on item rephrasing (Table 1). This approach involves generating alternative phrasings of existing items to enhance the variety of expressions captured. Normally, each item receives 10 to 50 rephrasings.

Table 1. Examples of Item Rephrasing

| Initial Item | Rephrased Item |
|---|---|
| I like to go out. | I frequently attend parties. |
| I love to learn new things. | I’m excited about learning new things. |
| I am an orderly person. | I am a well-organized person. |

Moreover, we are actively expanding our dataset to include both closed-ended and open-ended questions. This augmentation encompasses a range of question types, from straightforward binary inquiries to more open-ended queries, to which individuals can provide detailed responses.

Table 2. Question Types Used for Dataset Expansion

| Question Type | Question | Possible Answer |
|---|---|---|
| Binary (Yes-No) question | Do you like to go out? | A) Yes B) No |
| Closed-ended question | Do you prefer predictability, or are you spontaneous? | A) I prefer predictability. B) I am spontaneous. |
| Open-ended question | How do you spend your free time? | I enjoy socializing at parties and exploring various recreational activities. |

Through these data augmentation strategies, we aim to enhance the comprehensiveness and versatility of our dataset, allowing it to more effectively capture the nuances and breadth of natural language expressions related both to personality and cultural differences.

Data augmentation was carried out in several successive stages:

- Crowdsourced rephrasing, resulting in an extended data pool of 50,000–70,000 items.

- Application of GPT-like technology for automatic data generation, yielding a pool of up to 500,000 items.

A significant fraction of this generated data was reviewed by humans (see Section 2.3 below).

2.3 Quality Assurance and Possible Limitations

In the next phase, a rigorous quality assurance process was implemented. Most of the automatically generated data was subjected to careful human review and evaluation to ensure its accuracy and credibility.

The following limitations were identified during the evaluation process:

- Presence of a considerable number of repetitive items.

These items needlessly expand the final dataset, while providing low value.

- Potential concerns regarding the authenticity of some generated items.

Automatically generated data may exhibit characteristics of artificial language rather than natural human expression.

- Inaccurate reflection of subtleties of human language use.

Automatically generated data might not capture all the minor nuances of human language.

That’s why research and data development represent an ongoing and dynamic process. We continually engage in it both to verify and update our existing dataset and to improve the final models.

2.4 Resulting Dataset

The data collection and augmentation processes resulted in a vast dataset that forms a solid basis for further analysis and comprises the following components (Table 2):

- Classical psychological items (also known as sentences or statements)

- Closed-ended questions

- Open-ended questions.

This expanded dataset (500,000 items) was used to train our AI model. As a result, the model is capable of searching texts and interviews for similar items and mapping them to the initial dataset. Our commitment to maintaining a dynamic dataset means continual engagement in the research and data development process. This ongoing effort ensures that our dataset remains accurate, relevant, and comprehensive.

3 Method

This section presents Sentino’s methodology, which relies on semantic text analysis. This analysis method offers distinct advantages over lexical analysis because it leverages state-of-the-art models for a deeper understanding of textual content.

We have developed a psychological vector space that encompasses items and personality aspects, enabling precise analysis and correlation calculations. We employ a modern and powerful technological stack. However, we still highlight the importance of ongoing research in text analysis due to the evolving complexities of language. We process the text in the following way:

- Break the text into individual items, such as sentences or question-and-answer pairs

- Project these items into a multidimensional psychological vector space

- Calculate scores and quantiles for items

- Provide confidence intervals as part of our analysis.
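The first step above, breaking the text into items, can be sketched as follows. This is an illustration under simple assumptions, not Sentino's actual chunker: sentences are split with a naive regular expression, and interview turns are assumed to arrive as `(speaker, utterance)` tuples with speakers labeled `"Q"` and `"A"`. The helper names `split_sentences` and `pair_turns` are hypothetical.

```python
import re

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

def pair_turns(turns):
    """Pair each question with the answer that follows it.

    turns: list of (speaker, utterance) tuples, speaker is 'Q' or 'A'.
    """
    items, question = [], None
    for speaker, utterance in turns:
        if speaker == "Q":
            question = utterance
        elif speaker == "A" and question is not None:
            items.append((question, utterance))
            question = None
    return items

text = "I like to go out. I love to learn new things! Am I orderly? Mostly."
sentences = split_sentences(text)

turns = [
    ("Q", "How do you spend your free time?"),
    ("A", "I enjoy socializing at parties."),
    ("Q", "Do you like to go out?"),
    ("A", "Yes."),
]
qa_items = pair_turns(turns)
```

Keeping the question together with its answer matters because a short answer such as "Yes." carries no psychological meaning on its own.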

3.1 Semantic vs Lexical Analysis

Lexical analysis primarily focuses on individual words, their isolated meaning, and the frequency of their usage. Due to this focus on individual words and phrases, it can struggle to capture the context and subtleties of language. This limitation often results in lower reliability, as it may misinterpret the intended meaning of words in different contexts. Additionally, lexical analysis might not provide a strong correlation with the actual sentiment or intent behind the text, making it less effective in tasks that require a deeper understanding of natural language. This may have been one of the reasons why IBM discontinued its Watson Personality Insights service.

Sentino’s entire methodology is based on semantic text analysis. Semantic analysis delves deeper into the context and relationships among words, facilitating a more comprehensive understanding of text. This includes capturing nuances, disambiguating word senses, and discerning the underlying sentiment conveyed by the words. Additionally, semantic analysis is effective in grasping idiomatic expressions and broader meanings, making it exceptionally effective in natural language understanding. Semantic text analysis goes beyond surface-level word examination, providing a more profound and precise interpretation of textual content. However, it’s important to note one limitation associated with semantic analysis. If any meaning or information is absent from the original text being analyzed, it will not be reflected in the final results.

Today’s state-of-the-art NLP models (namely Transformer-based models) are designed to capture semantics well and currently play a crucial role in natural language processing. Consequently, Sentino employs Transformer-based models to bridge the text and its psychological meaning.

3.2 Psychological Vector Space

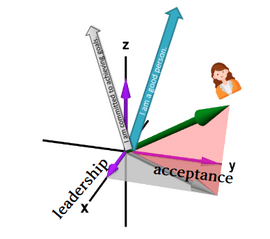

The core of Sentino’s model lies in the psychological vector space (Fig. 1), a multidimensional representation that encompasses both items and personality aspects of individuals. In this space, every item is represented by a vector, usually of 128 or 256 dimensions. These vectors are obtained by decomposing the correlation matrix between the items under the following constraint: the dot product of two item vectors must equal the correlation between the corresponding items.

Fig. 1. Psychological Vector Space

All the personality inventories outlined in Section 2.1, along with their respective dimensions (facets), are also present in this space in the form of vectors. Furthermore, each person can be seen as a vector, a composite “sum” of various pieces of information, encompassing personality traits, behaviors, and attributes.

The vector space is designed in a way that items, facets, or individuals with close semantic meanings correspond to similar vectors within this space. All 5,000 items are projected in a manner that ensures their similarity or correlation is well-preserved within this space.

Example:

Dot product of vectors v1 · v2 = correlation of psychological items 1 and 2

As described in the following sections, the accuracy of projection is ensured by our dedicated NLP model.
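To make the dot-product property concrete, here is a small sketch that factorizes a toy correlation matrix. The source does not say which decomposition Sentino uses; a Cholesky factorization is assumed here purely for illustration, and the 3×3 matrix is made up. Cholesky produces as many dimensions as there are items, so reducing 5,000 items to 128 or 256 dimensions would require a truncated factorization that reproduces the correlations only approximately.

```python
from math import sqrt

def cholesky(corr):
    """Return lower-triangular L with L * L^T == corr (corr positive definite)."""
    n = len(corr)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = sqrt(corr[i][i] - s)
            else:
                L[i][j] = (corr[i][j] - s) / L[j][j]
    return L

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Made-up correlations between three items (illustration only).
corr = [
    [1.0, 0.8, -0.3],
    [0.8, 1.0, -0.2],
    [-0.3, -0.2, 1.0],
]
vectors = cholesky(corr)  # row i is the vector assigned to item i

# Each pairwise dot product reproduces the corresponding correlation.
reconstructed = [[dot(u, v) for v in vectors] for u in vectors]
```

Because every diagonal entry of a correlation matrix is 1, each item vector has unit length, so the dot product here coincides with cosine similarity.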

3.3 NLP Model

In this section, we describe the NLP stack employed for the psychological projection described above.

We utilized a pretrained transformer-based NLP model, namely sentence-transformers/all-MiniLM-L6-v2. This model was originally trained on more than 1 billion sentences (of various kinds). Despite its rather small size, it produces high-quality embedding vectors and demonstrates remarkable performance across various NLP tasks. This model allows for keeping the training and evaluation processes very agile.

Our approach involves two major phases:

- Fine-tuning the pretrained model (all-MiniLM-L6-v2) on the augmented parallel corpus (about 500,000 item pairs), and

- Final tuning on a smaller golden dataset to teach the model the exact projections into the psychological vector space

Fine-tuning on the parallel corpus. As discussed above, we have augmented the golden dataset to about 500,000 item pairs. This corpus includes both positive (parallel, synonymic pairs, e.g. “I am extraverted” and “I like to go out”) and negative (non-parallel, antonymic pairs, e.g. “I am extraverted” and “I don’t like to go out”) examples. Positive examples dominate the dataset, constituting about 85% of the pairs. To compensate for this imbalance, we used a loss-balancing technique that assigns a higher loss weight to negative samples than to positive ones.
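One way such loss balancing could look is sketched below. The exact loss Sentino uses is not given in the text, so several things here are assumptions: the squared-error form, the similarity targets of +1 for positive and -1 for negative pairs, and the inverse-frequency weights. The function name `weighted_pair_loss` is hypothetical.

```python
def weighted_pair_loss(similarities, labels, positive_share=0.85):
    """Class-weighted squared error between predicted and target similarity.

    similarities: model cosine similarities per pair, each in [-1, 1].
    labels: 1 for a parallel (positive) pair, 0 for a negative pair.
    positive_share: fraction of positive pairs (about 0.85 in the text).
    """
    # Inverse-frequency weights: the rarer class gets the larger weight.
    w_pos = 1.0 / positive_share
    w_neg = 1.0 / (1.0 - positive_share)
    total, weight_sum = 0.0, 0.0
    for sim, label in zip(similarities, labels):
        target = 1.0 if label == 1 else -1.0  # assumed targets
        weight = w_pos if label == 1 else w_neg
        total += weight * (sim - target) ** 2
        weight_sum += weight
    return total / weight_sum

# Three positive pairs and one badly predicted negative pair (made-up values).
sims = [0.9, 0.8, 0.7, 0.1]
labels = [1, 1, 1, 0]
loss = weighted_pair_loss(sims, labels)
```

With an 85/15 split, an error on a negative pair weighs roughly 5.7 times as much as the same error on a positive pair, so the minority class is not drowned out during training.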

Fine-tuning on the golden dataset. Once we fine-tuned the model on the parallel corpus, we continued training it on the golden dataset. This tunes the model directly on the final task of projecting text into the psychological space. This set of items is significantly smaller (about 50,000–100,000). However, each item is directly linked to a vector in the personality vector space (usually of 128 or 256 dimensions).

Technically, both fine-tuning steps are performed simultaneously. We carefully optimize our model for both of the above-mentioned tasks at the same time. This ensures the best possible model quality.

The average pairwise similarity (that corresponds to the correlation) for the parallel corpus is about 0.6. This ensures that the psychological content of the text is captured well by the model. The average similarity between embedding vectors coming from the model and golden set is about 0.7. This ensures that the text projection to the psychological space preserves the relative meaning (similarity, correlation) between different items.
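The second figure above, the average similarity between model outputs and golden vectors, could be computed along the following lines. This is a sketch that assumes cosine similarity as the measure; the 3-dimensional vectors are made up, and the name `mean_similarity` is hypothetical.

```python
from math import sqrt

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

def mean_similarity(predicted, reference):
    """Average cosine similarity between paired vectors."""
    sims = [cosine(p, r) for p, r in zip(predicted, reference)]
    return sum(sims) / len(sims)

# Made-up vectors; real ones would have 128 or 256 dimensions.
model_vectors = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]]
golden_vectors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
score = mean_similarity(model_vectors, golden_vectors)
```

A value of 1.0 would mean that every model vector points in exactly the same direction as its golden counterpart.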

Challenges. It is generally understood that no model is perfect. In certain cases, models fail to capture very complex sentences (e.g. double negation, metaphors, etc.). We approach this by augmenting the data with extra items of the problematic kind. It is also clear that no dataset is perfect. Once the model is trained, we always run all the available examples through it and check whether the model makes any obvious mistakes. Frequently, the model itself points precisely to an existing problem within the dataset, which we then fix immediately.

3.4 Text and Interview Processing

In the text and interview processing phase, we employ a structured approach to transform textual data into valuable insights related to personality traits. This process unfolds in the following steps:

- Text Chunking.

We begin by breaking down the input text into smaller chunks. These chunks can consist of individual sentences or question-answer pairs, both of which we refer to as “items”.

- Vector Space Projection.

Afterwards, we use our Transformer-based NLP model to project each item into the multidimensional psychological vector space that we’ve established (Section 3.2). This space encompasses both the psychological items and the personality aspects of individuals, represented as vectors.

- Inventory Alignment.

We seek to align the item projections with known psychological inventories, such as those mentioned in Section 2.1. By doing so, we can associate the content of the text with specific personality dimensions, traits, and facets.

- Score Calculation.

To quantify the relevance and strength of the connection between items and each personality trait, we calculate a score. This score is generated by summing the projections of the item onto the vectors associated with the relevant inventories.

- Quantile Determination.

We utilize score distributions within our known population sample to determine quantiles. This step helps us assess where a particular score falls in relation to the broader population’s distribution. Quantiles offer valuable context for understanding the significance of the observed scores.

- Confidence Estimation.

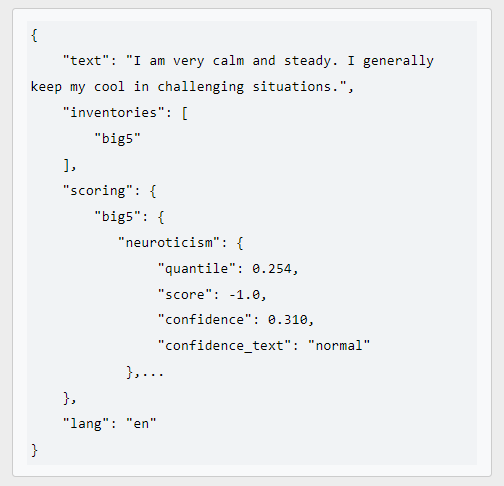

Confidence levels (Fig. 2) are computed based on the calculated scores. If the text contains substantial data related to a specific personality trait, the confidence level associated with that trait will be high. Conversely, if the text lacks relevant information for a particular trait, the confidence level for that trait will be lower.

Fig. 2. Example of Numerical and Text Values for Confidence Returned by Sentino API

This systematic process allows us to extract meaningful insights from textual data, enabling us to identify and quantify the presence of various personality dimensions, traits, and facets in the content. It ensures that our analysis is both data-driven and grounded in the original (golden) dataset and the derived psychological vector space. This sets up a robust framework for understanding the psychological aspects of individuals based on their textual expressions.
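The scoring, quantile, and confidence steps can be sketched as follows, assuming the items have already been projected into the vector space. The 2-dimensional vectors and population scores are made up. The confidence formula (total absolute evidence divided by a `saturation` constant and capped at 1) is a hypothetical stand-in, since the text only states that confidence grows with the amount of trait-related content.

```python
from bisect import bisect_right

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def trait_score(item_vectors, trait_vector):
    """Sum of the projections of every item onto one trait vector."""
    return sum(dot(v, trait_vector) for v in item_vectors)

def quantile(score, population_scores):
    """Share of the reference population scoring at or below `score`."""
    ordered = sorted(population_scores)
    return bisect_right(ordered, score) / len(ordered)

def confidence(item_vectors, trait_vector, saturation=3.0):
    """Hypothetical confidence: total absolute evidence, capped at 1."""
    evidence = sum(abs(dot(v, trait_vector)) for v in item_vectors)
    return min(1.0, evidence / saturation)

# Toy 2-D space: axis 0 ~ extraversion, axis 1 ~ orderliness (illustration only).
extraversion = [1.0, 0.0]
items = [[0.8, 0.1], [0.6, -0.2], [0.0, 0.9]]  # already projected items
population = [-0.5, 0.0, 0.3, 0.9, 1.2, 1.6, 2.0, 2.4]  # made-up scores

score = trait_score(items, extraversion)
q = quantile(score, population)
conf = confidence(items, extraversion)
```

In this toy example the third item says nothing about extraversion, so it adds neither to the score nor to the confidence for that trait.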

4 API in Action – Real-World Scenarios

This section explores real-world applications of the Sentino API, focusing on three distinct scenarios: classical testing, text and interview processing, and chatbot-driven interactions:

- In the classical testing scenario, the API computes personality metrics based on user-provided data.

- The text and interview processing scenario analyzes textual data to extract personality insights.

- The chatbot scenario utilizes SentinoBot to engage with users and collect data for personality assessment.

These scenarios illustrate the versatility of Sentino’s API and AI solutions for personality assessment in practical contexts. However, limitations related to data completeness, NLP model confidence, and specialized applications are acknowledged, emphasizing the need for ongoing data validation, model refinement, and additional training in certain domains.

4.1 Classical Testing

In this scenario, a user provides all the relevant data, namely answers (responses) to all the items of the given inventory. The Sentino API utilizes this classical psychometric approach to compute all metrics associated with the provided data, i.e. computes scores and quantiles. This scenario is ideal for individuals seeking a thorough understanding of their personality traits through established testing methods. The whole process takes even less time and effort if the relevant data, like respondents’ answers to relevant personality inventories, is already available in digital format.

Example:

Big Five Personality Inventory (Fragment) – Question-Answer Pairs

| Item | Strongly disagree (1) | Somewhat disagree (2) | Neither disagree nor agree (3) | Somewhat agree (4) | Strongly agree (5) |
|---|---|---|---|---|---|
| I am imaginative. | X | | | | |
| I am highly self-disciplined. | X | | | | |
| I am the life of the party. | X | | | | |
| I am extremely empathetic. | X | | | | |
| I stress out easily. | X | | | | |
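Classical scoring of such answers can be sketched as below. The item-to-trait keying and the reverse-keyed item "I keep in the background." are assumptions made for illustration; the source does not list Sentino's keys. Reverse keying (flipping the scale for negatively worded items) is common psychometric practice, and the function name `score_inventory` is hypothetical.

```python
def score_inventory(answers, keys, scale_max=5):
    """Average the keyed answers per trait.

    answers: dict item -> answer on a 1..scale_max scale.
    keys: dict item -> (trait, direction); direction -1 marks a reverse-keyed item.
    """
    totals, counts = {}, {}
    for item, answer in answers.items():
        trait, direction = keys[item]
        # Reverse-keyed items are flipped: 1 <-> 5, 2 <-> 4, and so on.
        value = answer if direction > 0 else scale_max + 1 - answer
        totals[trait] = totals.get(trait, 0) + value
        counts[trait] = counts.get(trait, 0) + 1
    return {trait: totals[trait] / counts[trait] for trait in totals}

# Assumed keying, for illustration only.
keys = {
    "I am the life of the party.": ("extraversion", +1),
    "I keep in the background.": ("extraversion", -1),  # hypothetical extra item
    "I stress out easily.": ("neuroticism", +1),
}
answers = {
    "I am the life of the party.": 4,
    "I keep in the background.": 2,
    "I stress out easily.": 3,
}
scores = score_inventory(answers, keys)
```

The resulting per-trait averages can then be compared with a reference population to obtain quantiles.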

4.2 Text and Interview Processing

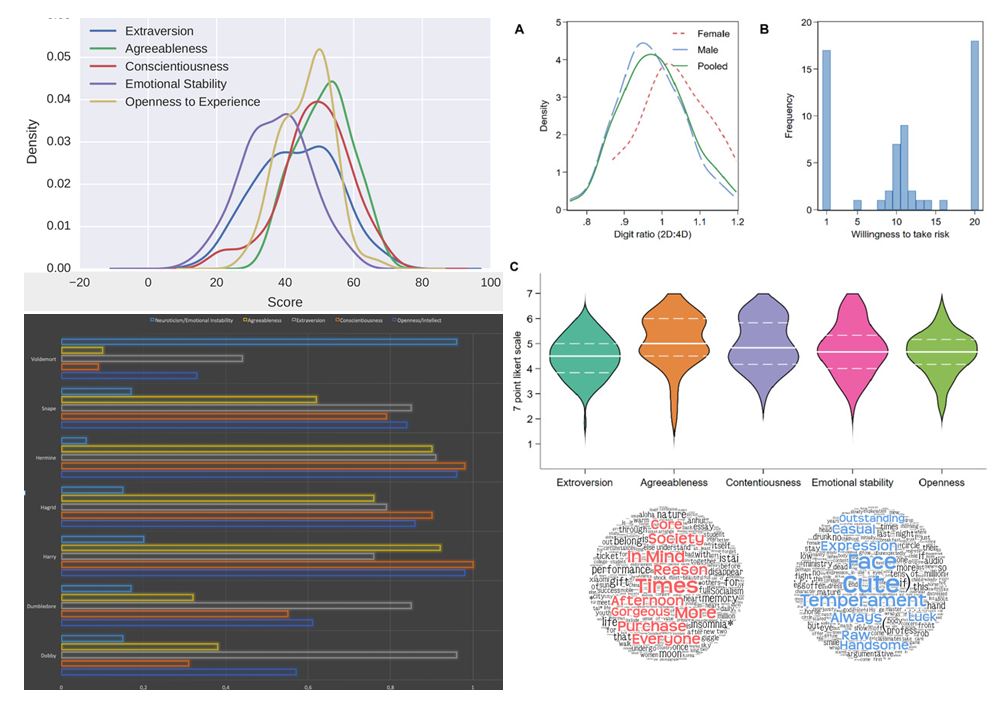

In this scenario, a user feeds the Sentino API with textual data, such as video/audio interview records or transcripts, written content, or social media posts. The API employs its text and interview processing capabilities to perform the analysis in the manner described in Section 3.4. Quantitative results are returned in an easy-to-use infographic form (see Fig. 3 for an example).

Fig. 3. Graphical Representation of Interview Analysis

This scenario enables users to gain insights into personality traits based on their textual expressions, making it valuable for various applications like HR, e-learning, personal development and coaching, dating and more.

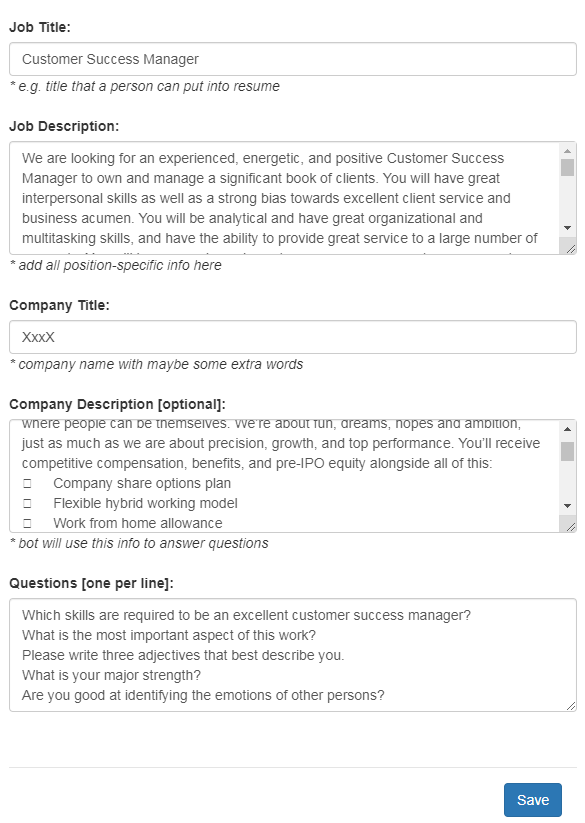

4.3 Chatbot

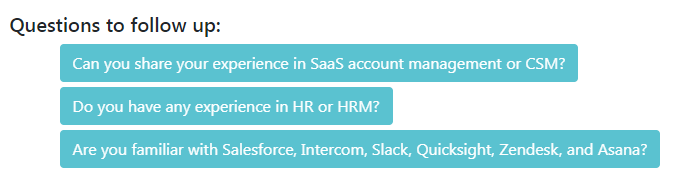

In this scenario, organizations deploy SentinoBot, the AI-powered chatbot solution, to engage with users and collect data for personality assessment. SentinoBot interacts with users through conversation, asking a series of questions (Fig. 4) designed to reveal personality insights.

Fig. 4. Setting SentinoBot for an Interview

Where applicable, it dynamically generates additional or clarifying questions (Fig. 5) to receive a comprehensive personality profile.

Fig. 5. Clarifying Questions Generated by SentinoBot

The collected data is then processed using Sentino’s AI algorithms, providing organizations with valuable insights into individual personality. This scenario streamlines the personality assessment process, making it efficient, interactive, and user-friendly.

The scenarios described above showcase the versatility and applicability of Sentino’s API and AI solutions in real-world contexts, catering to diverse needs for personality assessment and insights.

4.4 Limitations

Related to the Data. While Sentino has access to extensive datasets and employs data augmentation techniques, limitations may arise from the completeness and relevance of the data. To overcome this, ongoing data validation and enrichment processes are essential, involving human checks and continuous updates to ensure the dataset remains accurate and up-to-date.

Related to the NLP Model. Sentino’s approach relies on semantic understanding. Our API uses highly advanced AI models (state-of-the-art Transformers). However, semantic analysis may have confidence limitations in certain cases. Detecting the correct meaning of words can occasionally be challenging, leading to potential uncertainty in the interpretation of text. Overcoming this limitation requires adding more training examples, adopting newer models, and keeping up to date with the advancement of NLP technologies.

Related to the Approach. An inherent limitation lies in coreference resolution. It is an important step for many higher-level NLP tasks that involve natural language understanding, such as document summarization, question answering, and information extraction. In the process of chunking or item extraction, the content of an item may rely on preceding text elements (for example, the meaning of the phrase “I liked it” depends on what “it” refers to earlier in the text), and this context can be lost when the item is analyzed in isolation.

Related to the Application. Sentino’s Model is intentionally designed to serve as the most versatile tool for comprehending the healthy human psyche within its broad and diverse context. We do not delve into the exploration of potential mental health disorders or abnormalities. It’s also important to note that the efficacy of our model might be somewhat constrained in certain highly specialized applications or industries, necessitating additional training for its effective deployment in such scenarios.

Conclusion

In this paper, we have presented Sentino’s methodology for personality assessment, emphasizing the superiority of semantic analysis over lexical analysis in understanding textual content.

We discussed in detail the process of data gathering and augmentation that allowed us to reliably expand the dataset from 5K to 500K psychologically meaningful items.

We also examined the state-of-the-art NLP stack and explained the training procedure of Transformer-based models, which allows us to accurately capture the psychological meaning of unstructured text or interviews.

By employing semantic mapping from established references to known items, we consistently maintain the capacity to detect and deeply understand the underlying reasoning behind the decisions made by our algorithm. This ensures a robust foundation for psychological analysis in diverse contexts, advancing the field of personality assessment with enhanced transparency and scientific rigor. Thus we highlight the transparency of our methodology that distances itself from black-box approaches.

We also outlined the common applications of Sentino API, showcasing its versatility across various domains. While acknowledging limitations related to data quality and completeness, as well as the predictive power of our NLP model, we showed that Sentino has brought novelty and made significant advancements in the field of personality assessment, particularly in departing from classical psychometric testing. This innovative approach marks a promising step forward in the intersection of AI and psychology.

References

1. Goldberg, L. R. (1992). The development of markers for the Big-Five factor structure. Psychological Assessment, 4(1), 26-42.

2. Costa, P. T., & McCrae, R. R. (1992). Revised NEO Personality Inventory (NEO-PI-R) and NEO Five-Factor Inventory (NEO-FFI): Professional manual. Psychological Assessment Resources.

3. Lee, K., & Ashton, M. C. (2004). Psychometric properties of the HEXACO Personality Inventory. Multivariate Behavioral Research, 39(2), 329-358.

4. Pozzebon, J. A., Ashton, M. C., Lee, K., Visser, B. A., & Bourdage, J. S. (2010). The hierarchical structure of the French Big Five: A test of the higher-order model. Journal of Personality, 78(2), 567-592.

5. John, O. P., & Srivastava, S. (1999). The Big Five trait taxonomy: History, measurement, and theoretical perspectives. In L. A. Pervin & O. P. John (Eds.), Handbook of personality: Theory and research (Vol. 2, pp. 102-138). Guilford Press.

6. Goldberg, L. R., Johnson, J. A., Eber, H. W., Hogan, R., Ashton, M. C., Cloninger, C. R., & Gough, H. G. (2006). The International Personality Item Pool and the future of public-domain personality measures. Journal of Research in Personality, 40(1), 84-96.

7. Revelle, W. (2019). psych: Procedures for Personality and Psychological Research (Version 2.0.9) [Computer software]. https://cran.r-project.org/web/packages/psych/psych.pdf

8. Ashton, M. C., Lee, K., & de Vries, R. E. (2014). The HEXACO honesty-humility, agreeableness, and emotionality factors: A review of research and theory. Personality and Social Psychology Review, 18(2), 139-152.

9. Schneider, T. R., Lyons, J. B., & Khazon, S. (2013). Emotional intelligence and resilience. Personality and Individual Differences, 55(8), 909-914.

10. McCrae, R. R., & Costa, P. T. (2003). Personality in adulthood: A five-factor theory perspective. Guilford Press.